The blog entries "Think big: Consistent process management in the insurance industry" and "Process Management: Input, identifying concerns, and extracting data" discuss the significance of process management in the insurance industry. Now let's build on these topics and describe an architectural solution pattern: event streaming.

An explanation of the term

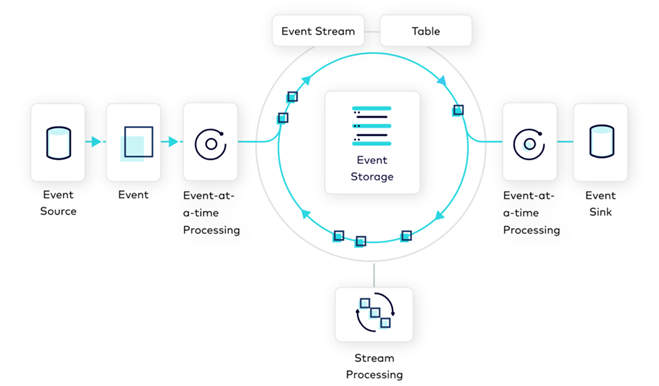

Event streaming is a programming paradigm that places data streams, i.e., sequences of events over the course of time, at the center of processing. An event is a change in the state of any object. In an event streaming architecture, information from various distributed systems is continuously gathered in real time and processed by one or several brokers based on the defined stream topology. The result of this processing is a new event, which is in turn processed in the event stream. This architecture opens up numerous benefits that are not possible with conventional data processing (storing data in relational databases and running queries when triggered). Among other things, these include:

- Real-time processing/visualization

- Loose coupling via data, which enables interchangeability and small deployment units

- Nearly unlimited scaling options

- Transparency and auditability of all processes in the system
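
The processing loop described above can be sketched in a few lines. This is a minimal in-memory illustration, not Kafka code: the `Event` class, the `run_stream` function, the event names, and the approval threshold of 1000 are all assumptions made for this example. It shows two of the points from the text: the result of processing is a new event that flows back into the stream, and the append-only log keeps every step traceable.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    type: str      # what happened, e.g. "ClaimReported" (hypothetical name)
    key: str       # id of the object whose state changed
    payload: dict  # data describing the change


def run_stream(initial_events, topology):
    """Process events until no new ones are produced; return the full log."""
    log = []                          # append-only record of every event
    pending = deque(initial_events)
    while pending:
        event = pending.popleft()
        log.append(event)
        # Each processor may emit new events, which are processed in turn.
        for process in topology.get(event.type, []):
            pending.extend(process(event))
    return log


def assess_claim(event):
    # Hypothetical rule: small claims are approved automatically.
    decision = "ClaimApproved" if event.payload["amount"] <= 1000 else "ClaimEscalated"
    return [Event(decision, event.key, event.payload)]


def notify_customer(event):
    return [Event("CustomerNotified", event.key, {"reason": event.type})]


# The "stream topology": which processors react to which event type.
TOPOLOGY = {
    "ClaimReported": [assess_claim],
    "ClaimApproved": [notify_customer],
}

log = run_stream([Event("ClaimReported", "claim-1", {"amount": 400})], TOPOLOGY)
print([e.type for e in log])
# ['ClaimReported', 'ClaimApproved', 'CustomerNotified']
```

In a real deployment the log would be a durable, distributed topic rather than a Python list, but the flow of events through processors follows the same idea.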

Event streaming as a basis for a process management engine in the insurance industry

A BPMN process is essentially no different from an event stream. A business process is in state A. The processing of an event, e.g., a user action or the result of an external service call, results in state B. A BPMN element is processed by a general or specific broker.
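
To make the analogy concrete, the following sketch treats a process instance as a state that is advanced by events. The state names, event names, and the `TRANSITIONS` table are hypothetical and stand in for a real BPMN model; the point is only that the current state can be derived by replaying the events in order.

```python
from functools import reduce

# (current state, event) -> next state; all names are illustrative assumptions.
TRANSITIONS = {
    ("received", "DocumentsChecked"): "in_review",
    ("in_review", "UserApproved"): "approved",
    ("in_review", "UserRejected"): "rejected",
    ("approved", "PaymentServiceConfirmed"): "closed",
}


def apply_event(state, event):
    """Return the next state; events that do not apply leave the state unchanged."""
    return TRANSITIONS.get((state, event), state)


def replay(events, start="received"):
    """Derive the current process state by folding over the event stream."""
    return reduce(apply_event, events, start)


print(replay(["DocumentsChecked", "UserApproved", "PaymentServiceConfirmed"]))
# closed
```

Because the state is a pure function of the event sequence, replaying a prefix of the stream yields the state the process was in at that point in time.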

The event streaming architecture constitutes the backbone of the in|sure Workflow and Workplace products. To that end, we use Apache Kafka together with Kafka Streams; Kafka is a de facto standard for event streaming. According to the Apache Kafka project's own statements, for instance, 80% of Fortune 100 companies and 10 of the 10 largest insurance companies use Kafka.

Task Routing

Routing tasks to specialists is an ideal deployment scenario for event streaming, since the state of tasks and specialists changes constantly and real-time processing is necessary.
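
A rough sketch of such a router follows. It is an illustration under simplifying assumptions, not the in|sure implementation: the `TaskRouter` class, the two event kinds, and matching by a single skill are all invented for this example. The router keeps the changing state of tasks and specialists and tries to match them every time an event arrives.

```python
from collections import defaultdict, deque


class TaskRouter:
    """Matches waiting tasks to free specialists as events arrive."""

    def __init__(self):
        self.waiting_tasks = defaultdict(deque)     # skill -> task ids
        self.free_specialists = defaultdict(deque)  # skill -> specialist names
        self.assignments = []                       # (task id, specialist) pairs

    def on_event(self, kind, subject, skill):
        if kind == "TaskCreated":
            self.waiting_tasks[skill].append(subject)
        elif kind == "SpecialistAvailable":
            self.free_specialists[skill].append(subject)
        else:
            raise ValueError(f"unknown event kind: {kind}")
        self._match(skill)

    def _match(self, skill):
        tasks = self.waiting_tasks[skill]
        people = self.free_specialists[skill]
        # Assign in arrival order for as long as both sides have entries.
        while tasks and people:
            self.assignments.append((tasks.popleft(), people.popleft()))


router = TaskRouter()
for event in [
    ("TaskCreated", "task-1", "motor"),
    ("TaskCreated", "task-2", "liability"),
    ("SpecialistAvailable", "alice", "motor"),
    ("SpecialistAvailable", "bob", "motor"),
    ("TaskCreated", "task-3", "motor"),
]:
    router.on_event(*event)

print(router.assignments)
# [('task-1', 'alice'), ('task-3', 'bob')]
```

Here `task-2` stays in the queue until a specialist with the `liability` skill becomes available; a production router would additionally weigh priorities, workloads, and deadlines.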

Scaling

Insurance companies have a fairly constant base load of process instances. In addition, planned load peaks (e.g., monthly or annual processing runs) and unplanned ones (e.g., major loss events) occur. Here, the ability to scale during ongoing operation is of great benefit, especially when operating in the cloud.
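
One common way to make this kind of scaling possible is to partition the stream by key and spread the partitions across workers. The sketch below illustrates the idea only: `partition_for` uses CRC32 as a stand-in hash and is not Kafka's actual partitioner, and the round-robin `assign_partitions` function is a simplification of what a consumer group coordinator does.

```python
import zlib


def partition_for(key, num_partitions):
    """Map a process instance key to a partition (stand-in hash, not Kafka's)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def assign_partitions(num_partitions, workers):
    """Distribute partitions round-robin across the currently running workers."""
    assignment = {worker: [] for worker in workers}
    for partition in range(num_partitions):
        assignment[workers[partition % len(workers)]].append(partition)
    return assignment


# Base load: two workers share six partitions.
print(assign_partitions(6, ["w1", "w2"]))
# {'w1': [0, 2, 4], 'w2': [1, 3, 5]}

# Load peak: a third worker is started and takes over part of the partitions.
print(assign_partitions(6, ["w1", "w2", "w3"]))
# {'w1': [0, 3], 'w2': [1, 4], 'w3': [2, 5]}
```

Since all events for one key land in the same partition, the events of a single process instance are still handled in order by one worker, regardless of how many workers are running.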

Real-time KPIs

The event streaming architecture enables the evaluation and display of KPIs in real time by adding reporting components as additional data consumers.
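
As an illustration, a reporting component can be thought of as one more consumer that reads the same events and keeps running aggregates. The `KpiConsumer` class, the event names, and the chosen KPIs below are assumptions for this sketch; timestamps are plain numbers (e.g., minutes) to keep the example short.

```python
class KpiConsumer:
    """Maintains running KPIs from process events, updated on every event."""

    def __init__(self):
        self.started_at = {}       # process id -> start timestamp
        self.completed = 0
        self.total_duration = 0.0

    def on_event(self, event_type, process_id, timestamp):
        if event_type == "ProcessStarted":
            self.started_at[process_id] = timestamp
        elif event_type == "ProcessCompleted" and process_id in self.started_at:
            self.total_duration += timestamp - self.started_at.pop(process_id)
            self.completed += 1

    def snapshot(self):
        average = self.total_duration / self.completed if self.completed else None
        return {
            "open": len(self.started_at),
            "completed": self.completed,
            "avg_duration": average,
        }


kpis = KpiConsumer()
for event in [
    ("ProcessStarted", "p1", 0),
    ("ProcessStarted", "p2", 10),
    ("ProcessCompleted", "p1", 30),
]:
    kpis.on_event(*event)

print(kpis.snapshot())
# {'open': 1, 'completed': 1, 'avg_duration': 30.0}
```

The consumer does not interfere with the processing components; it only reads the events they produce, so it can be added or removed without changing them.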

Customer extensibility

All in|sure products offer a core that remains upgradable across releases. Event streaming decouples the processing logic, making it possible to replace any individual broker with a customized implementation.
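
The following sketch shows the general idea of swapping one processing step without touching the rest. It is not the in|sure extension mechanism: the registry `CORE_PROCESSORS`, the function names, and the plausibility rules are hypothetical and chosen only to show that the contract between components is the event itself.

```python
def default_plausibility_check(event):
    # Hypothetical core behaviour: accept any positive claim amount.
    return {"type": "ClaimChecked", "claim": event["claim"], "ok": event["amount"] > 0}


def customer_plausibility_check(event):
    # Hypothetical customer rule: additionally cap the amount at 10,000.
    ok = 0 < event["amount"] <= 10_000
    return {"type": "ClaimChecked", "claim": event["claim"], "ok": ok}


# Processors shipped with the core, keyed by the event type they handle.
CORE_PROCESSORS = {"ClaimReported": default_plausibility_check}


def build_processors(core, overrides=None):
    """Combine core processors with customer overrides; the core stays untouched."""
    processors = dict(core)
    processors.update(overrides or {})
    return processors


def handle(event, processors):
    return processors[event["type"]](event)


event = {"type": "ClaimReported", "claim": "claim-7", "amount": 25_000}
standard = build_processors(CORE_PROCESSORS)
customized = build_processors(
    CORE_PROCESSORS, {"ClaimReported": customer_plausibility_check}
)

print(handle(event, standard)["ok"])    # True
print(handle(event, customized)["ok"])  # False
```

Both variants consume the same input event and emit the same output event type, so downstream components do not need to know which implementation is in place.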

Usage of data by third-party systems

Apache Kafka's event streaming platform makes it just as easy to integrate additional data sources as it is to let additional consumers use the existing data. This creates additional value.

At adesso insurance solutions, we are convinced that the event streaming architecture is the right choice for solving the business challenges in process management and that it can also form the center of data-driven processing in an insurer's core systems.

Do you have any questions or comments? Then please leave us a comment.