Artificial intelligence (AI) can be used for many tasks in the insurance industry, including customer communication, claims assessment, and input management. Despite the efficiency gains AI offers, companies often have concerns about security standards and the handling of sensitive data.

The European Union is responding to these concerns with new legislation to regulate the use of AI. The Artificial Intelligence Act ("AI Act") is the first comprehensive set of rules for artificial intelligence and is intended to ensure that AI is used securely, fairly, and transparently. The AI Act is currently still a draft, and the final version is pending. On February 2, 2024, the representatives of all EU member states unanimously approved the proposal. The European Parliament is expected to give its final approval in April. Since this vote is considered a formality, the AI Act is expected to take effect this spring.

Classification according to levels of risk

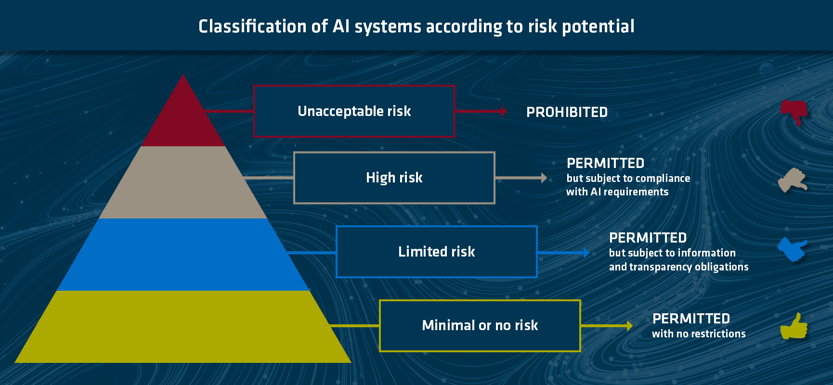

The AI Act classifies AI applications according to their level of risk. The higher the potential risk, the stricter the requirements for providers.

The current draft of the AI Act distinguishes four risk levels: unacceptable, high, limited, and minimal risk.

AI systems in the unacceptable risk category will be prohibited six months after the act enters into force. Among other things, these include AI systems that manipulate human behavior, social scoring systems, emotion recognition in the workplace and in education, and the untargeted scraping of facial images from the Internet.

AI systems are considered high-risk if they can negatively affect people's safety, their fundamental rights, or democracy. Examples include applications used in biometrics, critical infrastructure, recruitment, education and vocational training, and asylum procedures. The complete list of high-risk use cases can be found in Annex III of the draft AI Act. The high-risk category is the focus of the regulation, which sets out numerous rules and requirements for the providers and users of such systems.

According to the draft, systems such as chatbots, search algorithms, deepfakes, video games, and spam filters pose a limited or minimal risk. These systems are regulated less strictly or not at all: limited-risk systems mainly have to meet transparency and information obligations. For example, customers must be able to recognize clearly that they are communicating with a chatbot rather than a human, and AI-generated images must indicate that the content was generated by a machine.

The AI Act primarily regulates future AI systems

Insurers are also affected by the new legislation. The AI Act will complement the industry's existing regulatory framework and will affect applications in life and health insurance. According to Annex III of the draft, AI systems used for risk assessment and pricing in relation to natural persons in life and health insurance are classified as high-risk. These applications would therefore be subject to various requirements, and meeting them may take considerable time and money.

Jörg Asmussen, CEO of the German Insurance Association (GDV), disagrees with this classification. He points out that existing regulations already provide a high level of protection and sees no need for additional obligations. For high-risk systems, the AI Act requires companies, among other things, to establish a dedicated risk management and quality management system and to prepare technical documentation that demonstrates to the authorities that their AI systems comply with all applicable rules. In addition, high-risk AI systems must be subject to human oversight to ensure that their results are accurate and valid.

Frank Stuch, Chief Technology Evangelist at adesso insurance solutions, shared his view on how the new AI Act will affect the insurance industry: "The majority of insurers' IT systems will not be affected by the current EU regulation, as they are not AI systems as defined by the AI Act." Like Jörg Asmussen, he is not entirely convinced that AI-based risk assessment and pricing systems in health and life insurance should be classified as high-risk, although so far only a few insurance companies have implemented such AI. "In addition, other laws and regulations already restrict or prohibit the fully automated assessment of individual cases. The EU AI Act therefore mainly regulates future AI systems rather than insurers' current IT systems," says Frank Stuch.

However, insurers should not view the AI Act merely as an additional expense, because it also creates new opportunities. A uniform AI standard could lead customers to trust and accept AI technology more readily, an important factor for the future success of companies' AI systems.

"No excuse to postpone AI projects"

Overall, the EU's AI Act will influence how insurance companies use and govern artificial intelligence. Companies must review their existing AI systems and ensure that they comply with the new regulatory requirements. When developing new AI applications, they must take these requirements into account from the outset.

"AI systems are an enormous source of potential for insurance companies. Insurers cannot use the AI Act as an excuse to postpone AI projects, but should familiarize themselves with the implications of the new legislation in good time," summarizes Frank Stuch. Once the AI Act enters into force (likely in May), companies will have a two-year transition period to implement it, but systems with an unacceptable risk will be prohibited after just six months. In other words, get the ball rolling!

Would you like to learn more about how AI can help with input management and more? If so, feel free to contact our expert Florian Petermann, Senior Business Developer at adesso insurance solutions.
